Pathway 2: Spotting and reporting hate online

To come up with ideas or solutions against hate speech, young people first need to understand the phenomenon: how it makes people feel, and how it can be dealt with and countered. The SELMA Toolkit enables young people to handle hate speech on a personal level, as part of their (peer) group, and on a societal level. The societal level is essential if you are ambitious and truly aim to change the world.

In this section, we identify a number of SELMA modules which directly address the mechanisms of online hate and the role that social media platform features play, while also offering some alternative solutions and strategies.

Please note that we are by no means trying to be restrictive; SELMA has been designed as an open framework which gives you as much flexibility as you want or need to build effective and efficient pathways of change. The SELMA journeys articulated below have been developed for illustrative purposes.

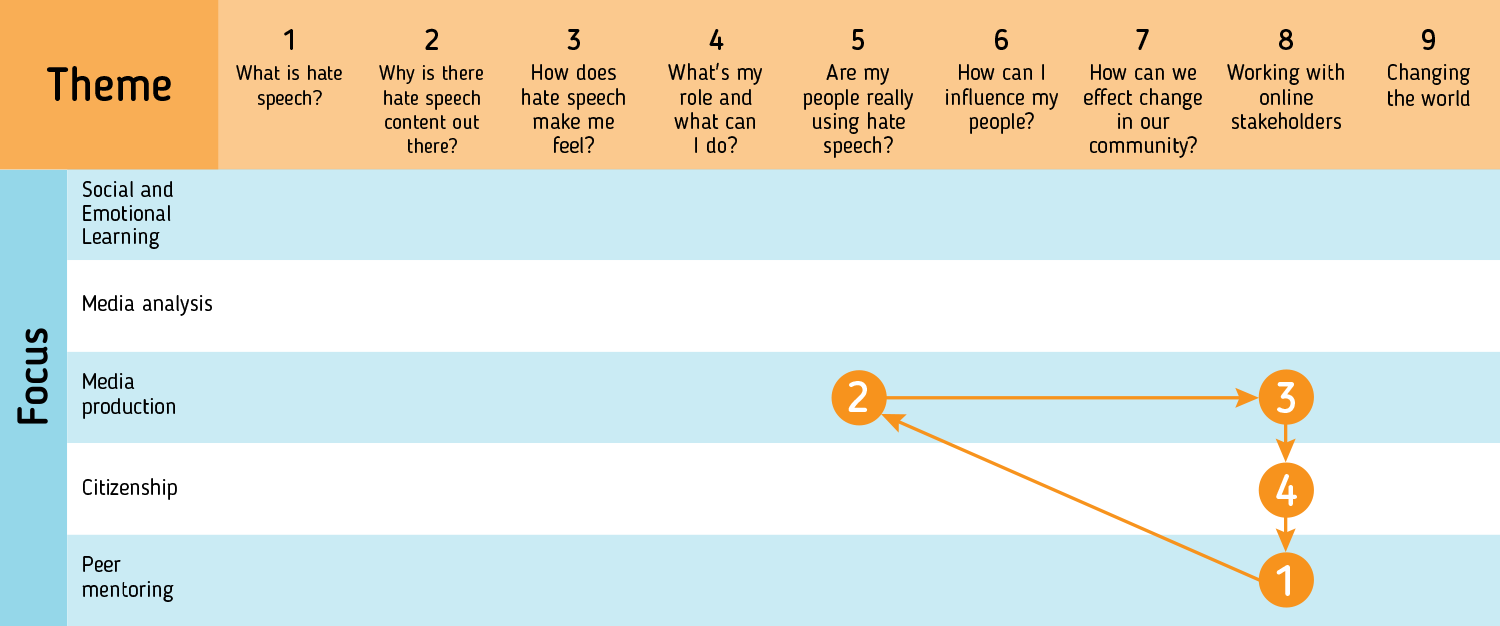

This pathway offers concrete tips to reduce hate speech online. It starts off with a reflection on what feeling ‘safe’ online means and which tools contribute to a safer internet. In a media production activity, young people learn to categorise hateful content and to understand the complexity and limitations of capturing hate speech. They are challenged to produce an algorithm, which helps them to understand what an algorithm is and how it can be used to fight hate speech online. The next activity deals with the community standards of social media companies and their reporting mechanisms. At the end of this pathway, they practise “the art of persuasion” to develop strategies for approaching online stakeholders with their ideas.

More specifically:

- Step 1: In the ‘Creating a safe space online’ activity, which is part of the ‘Peer Mentoring’ focus in ‘Theme 8’, young people are encouraged to reflect on what online safety means to them and what a safe space could look like. They are asked to come up with their own ideas for dealing with online comments and to think about community management. In doing so, they will find out what is necessary to keep platforms safe and how to express criticism or disagreement in a constructive and responsible way.

- Step 2: The ‘Spotting hate: creating an algorithm’ ‘Media Production’ activity in ‘Theme 5’ challenges young people to come up with solutions to identify hate speech, categorise it and think about ways of removing it from social media platforms. The aim is for learners to create and test a hate speech algorithm with the help of online tools and test statements. This approach follows the SELMA principle of “hacking hate”, which empowers young people to help shape (technological) solutions to stop online hate speech. This activity will help them to understand how an algorithm works, but it might also be the starting point for thinking about or developing new products that might contribute to solving the problem.

- Step 3: Once participants have a better understanding of the challenges social media providers face when trying to monitor, identify and remove hateful content, they can move on to the ‘Media Production’ activity in ‘Theme 8’, which helps them to better understand ‘Community standards’ and social network community guidelines, in order to find out what is acceptable or unacceptable behaviour. Participants will also consider the purpose of reporting tools, how they could be improved to help combat hate speech, and how young people can educate their peer community in this regard. In the Questions to Ask section, you will also find more specific questions to start a more elaborate discussion with young people about reporting tools and processes. The main activity looks at Facebook’s reporting process and how the platform handles reports. Young people will also learn to decide if and when reporting is the right action and how they can help others to report hateful content online. If you have time, you might also want to look at the community standards and reporting mechanisms of other social media providers and compare them.

- Step 4: The last unit of this pathway is ‘The art of persuasion’. This is a ‘Theme 8’ ‘Citizenship’ activity which investigates how to reach out to companies or other stakeholders and who to target in order to effect change. Learners will consider viable strategies for persuading online service providers to revise their standards or the functionality of their platform. Although most young people use social media services, they might not be aware that they can inform or even influence companies if they can identify a problem or offer valuable feedback. The main activity helps learners not only to identify issues (in line with Steps 1-3) but also to find the right people who have the power to help make the change they are looking for.
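
To make the idea behind Step 2 more tangible, the following is a minimal sketch of what a very simple rule-based "hate speech algorithm" could look like. It is not part of the SELMA materials: the term list, the phrase list, the scoring weights and the threshold are all hypothetical placeholders chosen for illustration, and a group would agree on its own rules and test statements.

```python
import re

# Hypothetical placeholder terms (assumption): in a real activity the group
# would decide together which words or phrases belong on these lists.
FLAGGED_TERMS = {"placeholderinsult", "placeholderslur"}
TARGETING_PHRASES = ("people like you",)


def score_statement(text: str) -> int:
    """Return a simple score: 2 points per flagged term, 1 per targeting phrase."""
    lowered = text.lower()
    words = re.findall(r"[a-z']+", lowered)
    score = sum(2 for word in words if word in FLAGGED_TERMS)
    score += sum(1 for phrase in TARGETING_PHRASES if phrase in lowered)
    return score


def classify(text: str, threshold: int = 2) -> str:
    """Label a statement based on an assumed, adjustable threshold."""
    if score_statement(text) >= threshold:
        return "flag for review"
    return "no action"


# Test statements, in the spirit of the activity.
test_statements = [
    "You are a placeholderinsult",
    "I disagree with your opinion",
    "Calling someone a placeholderinsult is wrong",
]

for statement in test_statements:
    print(f"{classify(statement):16} | {statement}")
```

Note that the third test statement is flagged even though it criticises the insult rather than using it against someone. A purely word-based rule cannot see context or intent, which illustrates the limitations of capturing hate speech that learners explore in this activity.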

The activities chosen for this pathway help young people to spot and report online hate speech. While working through them, young people might come up with ideas or products that could help to stop online hate speech. To implement their products, they might need your support over a longer period of time. Try to give them ongoing advice and make use of your own network of potential supporters. You can also help them to promote their ideas by using some of the campaigning activities from Pathway 1.