In this article, we look at some attempts to study the correlation between online hate speech and hate crime, in light of real-life cases of violence.

In recent years, a number of researchers and organisations have studied the possible causal links between online hate speech and hate crime. In 2011, Danielle Keats Citron and Helen L. Norton argued that, in its most aggressive form, hate speech may encourage, and thereby lead to, conduct that is abusive, harassing or insulting, including physical violence. In 2016, the European Commission against Racism and Intolerance (ECRI) defined hate crime as hate speech that takes the form of conduct that is in itself a criminal offence. In 2018, the Anti-Defamation League described a ladder of harm with many gradations, starting from acts of bias and discrimination and moving up towards bias-motivated violence such as murder, rape, assault or terrorism.

Also in 2018, Karsten Müller and Carlo Schwarz of the University of Warwick published a study titled "Fanning the Flames of Hate: Social Media and Hate Crime", which examines the link between online hate speech and the occurrence of hate crimes in Germany. Their findings suggest that social media has not only become fertile ground for the spread of hateful ideas, but also motivates real-life action.

To reach this conclusion, the researchers measured the salience of anti-refugee sentiment on the Facebook page of the German populist party "Alternative für Deutschland" (Alternative for Germany, AfD) and compared it with statistics on anti-refugee incidents collected by local advocacy groups. They found that anti-refugee hate crimes increase disproportionately in areas with higher Facebook usage during periods of high anti-refugee salience. Put concretely, the data point to roughly one anti-refugee crime for every four anti-refugee Facebook posts. This correlation is particularly strong for violent incidents against refugees, such as arson and assault.

Even more strikingly, in towns and cities that experienced internet outages, the increase in hate crimes linked to periods of high anti-refugee salience disappeared. The study does not claim that social media itself is to blame for hate crimes; rather, it suggests that social media acts as a propagation mechanism for hateful sentiments that originate in deeper issues.

The Christchurch mosque shootings – a recent example

The link between online hate speech and real-life hate crime has rarely been as evident as in the mass shooting that took place in Christchurch on 15 March 2019, which left 50 people dead and dozens of others wounded. On 8chan (the now infamous imageboard website known for allowing and promoting hateful comments, memes and images), the shooter posted about his plans and wrote that it was "time to stop shitposting and time to make a real life effort", suggesting that the attack was an extension of his online activity: an attempt to turn online hate into real-world violence. After he carried out the attack, he was praised by many other 8chan members. This pattern is not new. The perpetrators of several earlier mass killings, notably in North America (Isla Vista, California, in 2014; the Quebec City mosque in 2017; the Pittsburgh synagogue in 2018), had also shared their motivations on online forums such as 4chan's /pol/ board, Gab or incel message boards.

Addressing the roots of the problem

Currently, there is a global conversation on online hate speech revolving around gatekeeping: who should be held accountable for hateful content? Should national authorities play a role in regulation? Where do we draw the line between hate speech and freedom of expression?

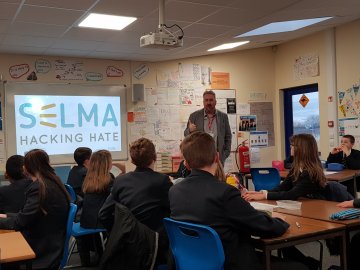

The SELMA partners believe that the problem runs deeper and needs to be addressed through education. We need to discuss hate speech with children and young people today to help them understand that certain personal characteristics must be protected from attack. We must also strengthen their ability to recognise and regulate their own emotions, thoughts and behaviours, and to take the perspective of, and empathise with, others, including those from diverse backgrounds and cultures.

As the Anti-Defamation League's Pyramid of Hate shows, bias-motivated behaviours escalate in severity, from biased attitudes to acts of bias, discrimination and, ultimately, bias-motivated violence. Challenging the problems at the bottom of the pyramid (attitudes and behaviours) makes it possible to interrupt the escalation before it reaches the most violent outbursts of hate.

This is exactly what the SELMA project strives to accomplish. The SELMA Toolkit will be a collection of 100 activities combining Social and Emotional Learning, media literacy and citizenship education. It will enable educators working with young people aged 11 to 16 to empower them to engage critically and creatively with the problem of online hate speech, and to become agents of change in their offline and online communities.

The Toolkit will be released on this website in May 2019. In the meantime, we invite you to join the discussion on the consequences of hate speech on social media: keep an eye on the #SELMA_eu hashtag on Twitter and follow us on Facebook.